Wiz Research Uncovers DeepSeek Security Lapse

In one of the most significant security incidents to hit the AI industry so far, Chinese AI startup DeepSeek left a large database publicly accessible, exposing highly sensitive information such as user chat histories, API keys, backend details, and system logs. The exposure was discovered by Wiz Research, which promptly disclosed the issue to DeepSeek. The company quickly restricted public access and secured the database.

The Discovery of “DeepLeak”

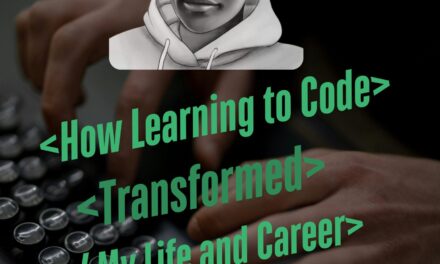

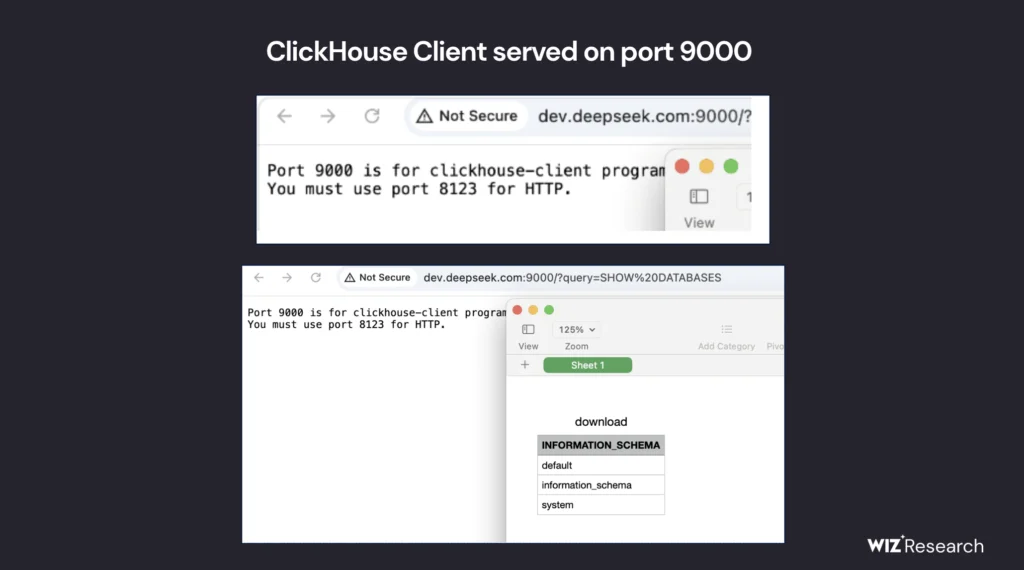

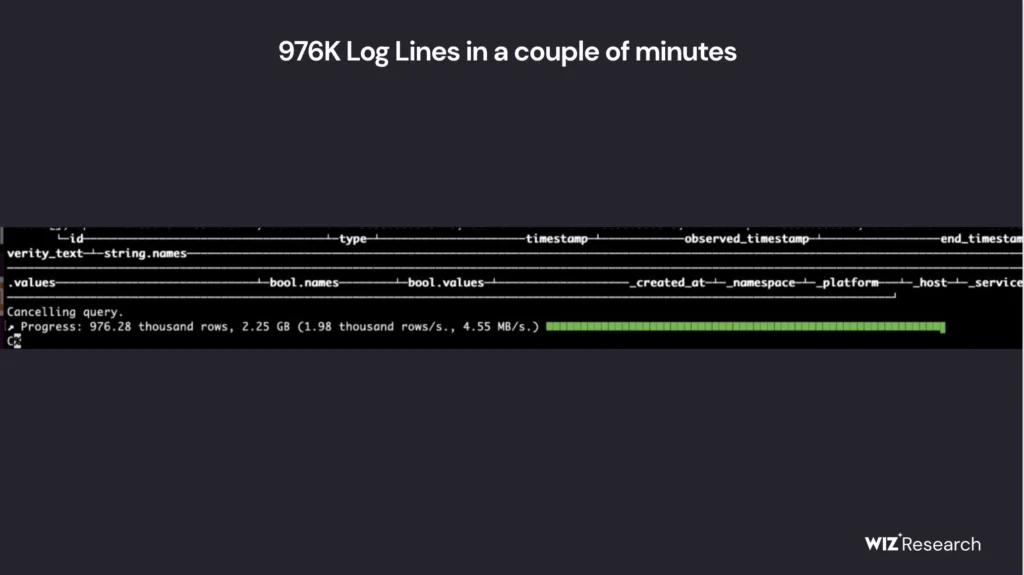

Wiz Research discovered the exposed database while reviewing DeepSeek's external security posture. The investigation revealed a publicly accessible ClickHouse database hosted at oauth2callback.deepseek.com:9000 and dev.deepseek.com:9000. The database required no authentication and contained over one million log entries, including plaintext chat messages, operational metadata, and references to internal API endpoints.

With nothing more than a few SQL queries sent through ClickHouse’s HTTP API, the Wiz researchers gained full access to the stored datasets, confirming that highly sensitive information was exposed. The “log_stream” table contained:

- Timestamps dating back to January 6, 2025

- Chat history and API keys stored in plaintext

- Backend service details that could allow unauthorized access to DeepSeek’s infrastructure

- Operational metadata that exposed the inner workings of DeepSeek’s AI models and backend architecture
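The ease of this access is worth stressing: ClickHouse's HTTP interface accepts SQL in a `query` URL parameter, and when a request carries no credentials it runs as the `default` user, so a server whose default user has no password will answer anyone. The Python sketch below, using only the standard library, is one way operators might check their own hosts for that misconfiguration by sending a single read-only `SELECT 1` without credentials. It is not Wiz's tooling. The default port 8123 (ClickHouse's standard HTTP port; 9000, mentioned above, is its native TCP protocol port) and the reading of HTTP 401/403 as "authentication required" are assumptions about a typical deployment; any other reply is reported as `UNKNOWN` for manual review.

```python
import urllib.error
import urllib.parse
import urllib.request

# Assumption: the HTTP interface is on ClickHouse's default port.
# Change this if your deployment exposes it elsewhere.
DEFAULT_HTTP_PORT = 8123


def build_probe_url(host: str, port: int = DEFAULT_HTTP_PORT, query: str = "SELECT 1") -> str:
    """Build a ClickHouse HTTP-interface URL that passes `query` as a URL parameter."""
    return f"http://{host}:{port}/?" + urllib.parse.urlencode({"query": query})


def classify(status: int, body: str) -> str:
    """Interpret the server's reply to an anonymous `SELECT 1`."""
    if status == 200 and body.strip() == "1":
        # The query executed even though no user or password was supplied.
        return "OPEN"
    if status in (401, 403):
        # The server refused to run anything without valid credentials.
        return "AUTH_REQUIRED"
    # Proxies, server errors, or non-ClickHouse services need a manual look.
    return "UNKNOWN"


def probe(host: str, port: int = DEFAULT_HTTP_PORT, timeout: float = 5.0) -> str:
    """Send one anonymous read-only query and classify the response."""
    url = build_probe_url(host, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify(resp.status, resp.read().decode("utf-8", "replace"))
    except urllib.error.HTTPError as err:
        # Non-2xx replies still carry a status code and an error body.
        return classify(err.code, err.read().decode("utf-8", "replace"))
    except OSError:
        # Connection refused, DNS failure, or timeout: nothing answered.
        return "UNREACHABLE"
```

Calling `probe("clickhouse.example.internal")` (a placeholder hostname) returns one of `OPEN`, `AUTH_REQUIRED`, `UNKNOWN`, or `UNREACHABLE`. Running it from outside the network perimeter mirrors the kind of external posture review described above; an `OPEN` result means anyone on the internet can do what the researchers did.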

Potential Risks and Industry Implications

This level of exposure posed a critical security risk not only to DeepSeek but also to its users. Had it been exploited, the open database could have allowed attackers to:

- Exfiltrate sensitive logs, including confidential chat data

- Steal plaintext API keys and use them for unauthorized access

- Escalate privileges within DeepSeek’s infrastructure

- Extract proprietary AI model information and other intellectual property

The incident reflects a growing concern in the AI industry: as companies race to develop and deploy AI technologies, security often lags behind. In prioritizing rapid innovation, companies sometimes cut corners on basic security practices and leave critical systems open to attack.

Lessons for the AI Industry

DeepSeek’s database leak serves as a cautionary tale for AI startups and enterprises alike. Key takeaways from this incident include:

- Security-first approach: AI companies must prioritize securing their infrastructure, ensuring that sensitive data is protected against unauthorized access.

- Regular security audits: Continuous monitoring and vulnerability assessments should be standard practice to detect and mitigate risks before they become major breaches.

- Authentication and access controls: Publicly accessible databases without authentication create severe risks. Strong authentication mechanisms must be implemented to prevent unauthorized access.

- Encryption of sensitive data: Storing chat histories, API keys, and backend details in plaintext significantly increases the damage a leak can do. Encrypting such data, and keeping secrets such as API keys out of logs altogether, limits what an attacker can read even if a system is exposed.
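The plaintext-secrets lesson can be applied even before encryption: a secret that never reaches the log stream cannot leak from it. The Python sketch below is a minimal illustration, not DeepSeek's or Wiz's remediation. It attaches a standard-library `logging.Filter` to a handler so that API keys are masked before each line is written. The `sk-` key format, the `api_key=` pattern, the `make_logger` helper, and the logger names are all hypothetical assumptions to be replaced with whatever a real system uses.

```python
import io
import logging
import re

# Hypothetical secret formats; replace with patterns that match your own keys.
SECRET_PATTERNS = [
    (re.compile(r"\bsk-[A-Za-z0-9]{16,}\b"), "[REDACTED]"),               # assumed bearer-style API keys
    (re.compile(r"(?i)\b(api[_-]?key\s*[=:]\s*)\S+"), r"\1[REDACTED]"),  # api_key=... / API-KEY: ... pairs
]


class RedactSecretsFilter(logging.Filter):
    """Scrub secrets from each record's message before the handler formats it."""

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge msg % args so secrets passed as args are caught too
        for pattern, replacement in SECRET_PATTERNS:
            message = pattern.sub(replacement, message)
        record.msg = message
        record.args = None  # args are already merged into msg
        return True  # keep the record; only its content changes


def make_logger(stream, name: str = "chat-backend") -> logging.Logger:
    """Hypothetical helper: a logger whose handler redacts secrets before writing to `stream`."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler(stream)
    handler.addFilter(RedactSecretsFilter())
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep demo output confined to `stream`
    return logger


if __name__ == "__main__":
    buf = io.StringIO()
    log = make_logger(buf)
    log.info("request from user 42 with key %s", "sk-" + "a" * 24)
    log.warning("retrying upstream call, api_key=%s", "hunter2")
    # Prints:
    # INFO request from user 42 with key [REDACTED]
    # WARNING retrying upstream call, api_key=[REDACTED]
    print(buf.getvalue(), end="")
```

Redaction complements encryption rather than replacing it: this filter rewrites only the log message, so exception tracebacks (`exc_info`) and stored chat content still need their own protection at rest.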

Conclusion

The DeepSeek data leak highlights the urgent need for stronger security in the AI industry. While AI companies race to build state-of-the-art models, they cannot afford to neglect data protection. Security must be built into AI development from the start to keep sensitive user data and proprietary information safe.

As AI systems become increasingly embedded in business and everyday life, incidents like this one are a stark reminder that innovation without security invites disaster. It is high time the AI industry took concrete steps toward robust security frameworks in order to regain the trust of its users worldwide.